I am passionate about creating impact through research and technology. At present, I am working on intelligent e-commerce search at Tonita. Previously, I was a graduate student in Computational Science and Engineering at Harvard, advised by Dr. Weiwei Pan and Prof. Finale Doshi-Velez. I was also nominated for the Forbes 30 Under 30 – Boston, named an Adobe Research Women-In-Technology Scholar, and selected as a Machine Learning Alignment Scholar by the Stanford Existential Risks Initiative. My research interests include natural language processing and machine learning interpretability and explainability. Before Harvard, I was fortunate to be advised by Prof. Vijay Natarajan at the Indian Institute of Science, where I worked on developing parallel algorithms for computational topology and geometry.

I enjoy combining research with social causes that make a difference. In 2022, as part of MIT's Brave Behind Bars course, I taught Computer Science and mentored incarcerated individuals in New England prisons; this work was featured in the Washington Post. I have also volunteered at the Harvard Square Homeless Shelter and helped with backend operations for the Humans of AI Podcast. In 2020, I founded my own podcast to drive the conversation on gender inequity and why women belong at the table.

Outside of research, I love writing, hiking and running and am training to complete a half-marathon one day. I also marvel at music and chess. Some of my work is listed below. Click on the links to see more details.

- 2024: I have resumed writing. Stay tuned for more.

- 2024: I am serving as an Ethics Reviewer at ICML 2024.

- 2024: Our paper "Identifying the Risk of Diabetic Retinopathy Progression Using Machine Learning on Ultrawide Field Retinal Images" was accepted for publication at the International Workshop on Health Intelligence, AAAI Conference on Artificial Intelligence 2024. This work was previously presented at the American Diabetes Association’s 83rd Scientific Sessions and the MIT-MGB AI Cures Conference.

- 2023: Excited to join Tonita and contribute to the vision of intelligent e-commerce search.

- 2023: I was nominated for the 2023 Forbes 30 Under 30 – Boston.

- 2023: Our paper "Why do universal adversarial attacks work on large language models?: Geometry might be the answer" was accepted to the New Frontiers in Adversarial Machine Learning Workshop, ICML.

- 2023: I defended my thesis and graduated from Harvard! [Slides]

- 2023: Spotlight Talk on "Why do universal adversarial attacks work on LLMs?" at the New England NLP Meeting Series.

- 2023: Research Seminar on "GPU Parallel Computation of Morse-Smale Complexes" at Flagship Pioneering.

- 2023: I was a course developer and Teaching Fellow for CS 181: Introduction to Machine Learning (Spring 2023) at Harvard.

- 2023: Our paper "Tachyon: Efficient Shared Memory Parallel Computation of Extremum Graphs" was accepted for publication at the Computer Graphics Forum.

- 2023: Lightning Talk, "What makes a good explanation?" at the Women in Data Science (WiDS) Conference, Cambridge.

- 2023: My research was adapted as a graduate machine learning course CS6216: Advanced Topics in Machine Learning (Spring 2023) at the National University of Singapore (NUS).

- 2022: Spotlight Talk, "What makes a good explanation?" at the Trustworthy Embodied AI Workshop, NeurIPS 2022.

- 2022: I was selected as a Machine Learning Alignment Scholar and awarded $6,000 by the Stanford Existential Risks Initiative to conduct research on large language models.

- 2022: I was selected to represent Harvard University at the Grace Hopper Celebration.

- 2022: I interned with the Deep Learning Performance team at NVIDIA during the summer.

- 2022: As part of MIT's Brave Behind Bars course, I taught Computer Science and mentored incarcerated individuals in New England prisons, which was featured in the Washington Post.

- 2022: I was a Panelist at Harvard's IACS Research & Thesis Panel and IACS Graduate Admissions Information Panel.

- 2022: I was selected as an Adobe Research Women-In-Technology Scholar (one of 16 across the United States, each awarded a prize of $10,000) and featured by Harvard University.

- 2022: Our paper "What makes a good explanation?: A Harmonized View of Properties of Explanations" was accepted to the Trustworthy and Socially Responsible Machine Learning Workshop, NeurIPS.

- 2022: Our paper "GPU Parallel Computation of Morse-Smale Complexes", previously published and presented at the IEEE VIS Conference 2020, was accepted for publication at the IEEE Transactions on Visualization and Computer Graphics.

- 2021: I was selected as a Google CS Mentorship Program Scholar.

- 2021: I was a Teaching Fellow for CS50: Introduction to Computer Science (Fall 2021) at Harvard.

- 2021: Women in High Performance Computing (WHPC) Lightning Talk at the Supercomputing Conference.

- 2021: Invited Speaker, "GPU Parallel Computation of Morse-Smale Complexes", ACM ARCS Symposium 2021. [Slides] [Poster]

- 2020: Introducing the She Belongs Podcast, to drive conversation around gender inequality and why women belong at the table.

- 2020: I volunteered with the backend operations of the Humans of AI: Stories, Not Stats Podcast.

- Varshini Subhash*, Anna Bialas*, Weiwei Pan, Finale Doshi-Velez, “Why do universal adversarial attacks work on large language models?: Geometry might be the answer”, New Frontiers in Adversarial Machine Learning Workshop, ICML 2023. [arXiv]

- Amber Nigam*, Jie Sun*, Varshini Subhash, Paolo Antonio S. Silva, MD, “Identifying the Risk of Diabetic Retinopathy Progression Using Machine Learning on Ultrawide Field Retinal Images”, Accepted Abstract at American Diabetes Association’s 83rd Scientific Sessions, 2023.

- Zixi Chen*, Varshini Subhash*, Marton Havasi, Weiwei Pan, Finale Doshi-Velez, “What Makes a Good Explanation?: A Harmonized View of Properties of Explanations”, Trustworthy and Socially Responsible Machine Learning Workshop, NeurIPS 2022. [arXiv]

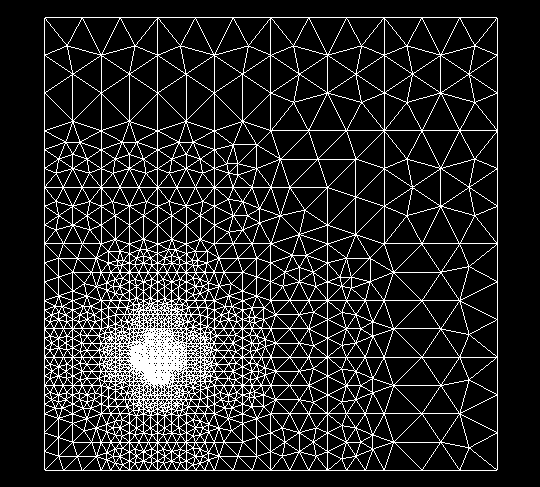

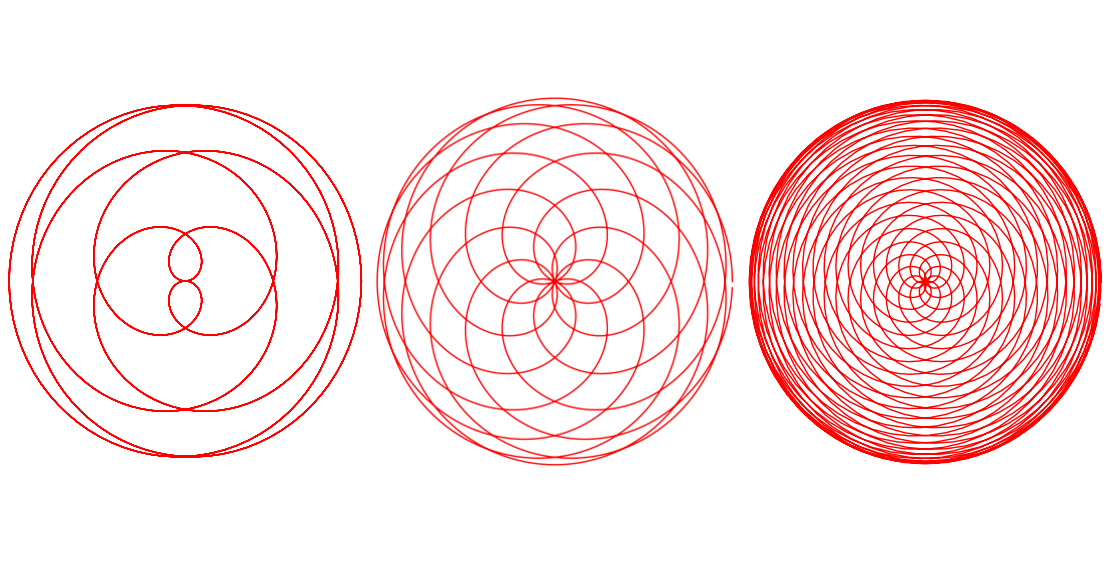

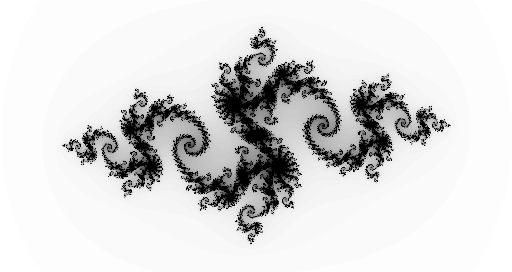

- Varshini Subhash, Karran Pandey, Vijay Natarajan, “GPU Parallel Algorithm for Computing Morse-Smale Complexes”, IEEE Transactions on Visualization and Computer Graphics | IEEE VIS Conference 2020. [IEEE Xplore]

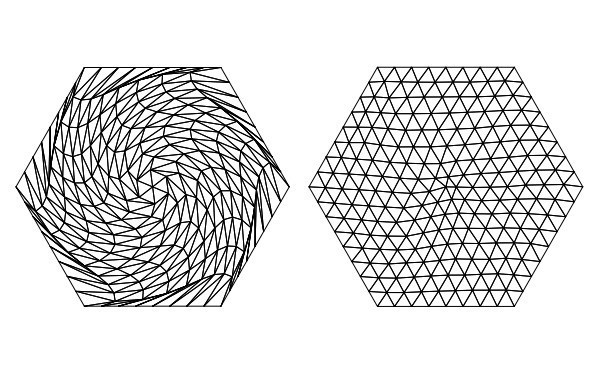

- Abhijath Ande, Varshini Subhash, Vijay Natarajan, “Tachyon: Efficient Shared Memory Parallel Computation of Extremum Graphs”. [Computer Graphics Forum 2023]

- Varshini Subhash, “Can Large Language Models Change User Preference Adversarially?”. [arXiv]

Please feel free to reach out to me at: varshinisubhash [at] g [dot] harvard [dot] edu